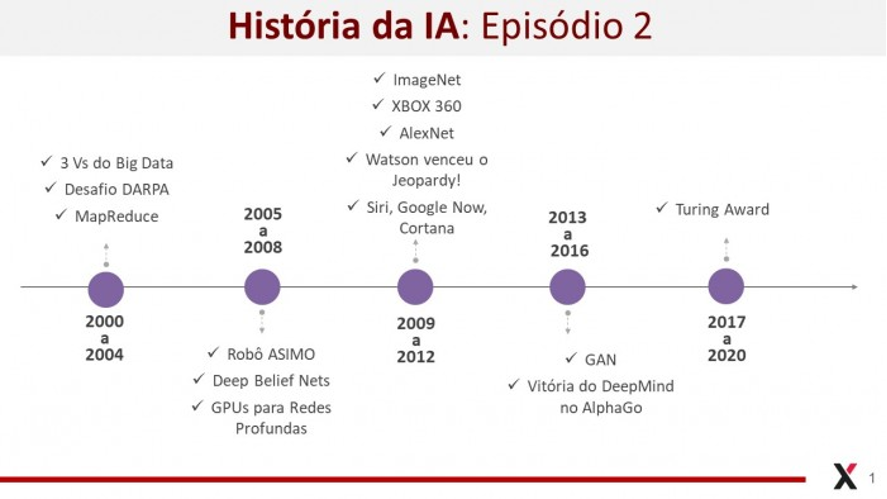

EPISODE 02 – WHERE WE ARE: 2000 to 2020

(In our first episode we brought a brief account of the origins of AI (1940 to 2000) focusing on some historical landmarks in the area. If you haven’t read it yet, I invite you to start your reading there before reading this post.)

The first two decades of the new millennium ushered in a new phase in AI, marked by significant advances and a wide penetration in the productive sectors of the economy.

The United States Defense Advanced Research Projects Agency (DARPA) launched a Grand Challenge for the development of autonomous vehicles in 2004, and in 2005 a Stanford University autonomous vehicle won the challenge by driving in a desert for 131 miles. At the same time, Honda developed the humanoid robot ASIMO, capable of walking and interacting with humans, and recommendation systems gained a lot of expression, mainly as tools for optimizing the shopping experience and personalization in e-commerce.

In the same period, we had important evolutions for the consolidation of what came to be known as Big Data. Of course, the history of Big Data can be traced back to a much more remote time, but we will focus here on the events that led to the explosion of the area. In 2000 Seisint Inc. developed a distributed file-sharing framework, and in 2001 the Gartner Group (then called the Meta Group) published a report outlining challenges and opportunities for growing data as a 3D element, increasing the volume, speed, and variety of data. This 3D element became known as the 3Vs of Big Data. In 2004 Google published a paper on a process called MapReduce and an open-source implementation called Hadoop was made and had wide visibility. Big Data also gained a lot of traction with the increase in web traffic and the development of mobility, which promoted an exponential increase in the amount of semi- and unstructured data generated and stored, mainly multimedia data such as texts, images and videos.

In these two decades, we cannot fail to mention the relevant advances in deep learning), whose initial history is intertwined with the history of neural networks already narrated in the first episode of this series. It is worth taking a small step back in time and highlighting the proposition of recurrent networks LSTM in 1997 by Sepp Hochreiter and Jurgen Schmidhuber, who changed the history of deep learning. In 2006 Geoffrey Hinton, Ruslan Salakhutdinov, Osindero and Teh published an article proposing a fast-learning algorithm for networks called deep belief nets. In 2008 Andrew Ng ‘s research group at Stanford University started using GPUs to train deep networks, increasing the processing speed of networks by orders of magnitude. In 2009 another Stanford group, this time led by researcher Fei-Fei Li, launched the ImageNet database, with 14 million labeled images for community use. In 2011 Yoshua Bengio, Antoine Bordes and Xavier Glorot published a paper in which they showed that a specific type of activation function could solve the numerical problem caused by very low gradient values, and in 2012 AlexNet, an implementation of convolutional networks on GPUs, promoted a leap in accuracy in the performance of deep networks applied to Imagenet data. In 2014, Generative Adversarial Networks (GAN) emerged, proposed by Ian Goodfellow and in 2016 a deep reinforcement learning model created by the company Deepmind won the world champion in the game Alphago.

In the last decade, AI algorithms and solutions have become part of many systems and products, solving complex problems in areas such as speech recognition, robotics, medical diagnostics, natural language processing, logistics and operations research, anomaly detection (e.g., frauds, crises, disasters, etc.) and many others. To illustrate some of these developments, we can mention that in 2011 IBM’s Watson won two great champions, by a wide margin, in a quiz contest on the Jeopardy! program. A year earlier, Microsoft had released Kinect for the XBOX 360 game console, based on machine learning technology for motion capture. Apple’s Siri, Google Now and Microsoft’s Cortana have also emerged as AI solutions, more specifically natural language processing, with the aim of answering questions and providing recommendations for users.

In addition to the greater scientific rigor of AI, high data availability and the possibility of contracting storage and processing on demand, I want to highlight another factor that has contributed to the acceleration of the area in recent years. Giant tech companies such as Google, Amazon, Microsoft, Apple, Facebook, Intel and others have started to open up some of their technology to the community. Frameworks, infrastructure, toolkits, platforms, etc., were made available openly so that professionals, companies, entrepreneurs and educational and research institutions could use and advance knowledge and technology transfer. Keras, TensorFlow, NLTK, Spark, Scikit-learn, DAAL, Gensim, MongoDB, and Tika are just a few examples of these.

Finally, a striking aspect for the advancement of AI in the new millennium was the fact that research in the area began to delve into more conceptual and theoretical aspects, ceasing to be a mostly empirical discipline and becoming a more scientific area. The application of mathematical knowledge and formalisms, mainly linear algebra, probability and statistics, economic theories and mathematical programming (operational research), brought greater rigor to artificial intelligence. The greatest scientific recognition for AI came with the Yoshua Award Bengio, Geoffrey Hinton and Yann Lecun in 2019 with the Turing Award for research involving deep learning.